Cloudflare’s 12th Generation servers — 145% more performant and 63% more efficient

Cloudflare is thrilled to announce the general deployment of our next generation of servers — Gen 12 powered by AMD EPYC 9684X (code name “Genoa-X”) processors. This next generation focuses on delivering exceptional performance across all Cloudflare services, enhanced support for AI/ML workloads, significant strides in power efficiency, and improved security features.

Here are some key performance indicators and feature improvements that this generation delivers as compared to the prior generation:

Beginning with performance, with close engineering collaboration between Cloudflare and AMD on optimization, Gen 12 servers can serve more than twice as many requests per second (RPS) as Gen 11 servers, resulting in lower Cloudflare infrastructure build-out costs.

Next, our power efficiency has improved significantly, by more than 60% in RPS per watt as compared to the prior generation. As Cloudflare continues to expand our infrastructure footprint, the improved efficiency helps reduce Cloudflare’s operational expenditure and carbon footprint as a percentage of our fleet size.

Third, in response to the growing demand for AI capabilities, we’ve updated the thermal-mechanical design of our Gen 12 server to support more powerful GPUs. This aligns with the Workers AI objective to support larger large language models and increase throughput for smaller models. This enhancement underscores our ongoing commitment to advancing AI inference capabilities.

Fourth, to underscore our security-first position as a company, we’ve integrated hardware root of trust (HRoT) capabilities to ensure the integrity of boot firmware and baseboard management controller (BMC) firmware. Continuing to embrace open standards, the baseboard management and security controller (Data Center Secure Control Module or OCP DC-SCM) that we’ve designed into our systems is modular and vendor-agnostic, enabling a unified OpenBMC image, quicker prototyping, and reuse across platforms.

Finally, given the increasing importance of supply assurance and reliability in infrastructure deployments, our approach includes a robust multi-vendor strategy to mitigate supply chain risks, ensuring continuity and resiliency of our infrastructure deployment.

Cloudflare is dedicated to constantly improving our server fleet, empowering businesses worldwide with enhanced performance, efficiency, and security.

Gen 12 Servers

Let’s take a closer look at our Gen 12 server. The server is powered by a 4th generation AMD EPYC Processor, paired with 384 GB of DDR5 RAM, 16 TB of NVMe storage, a dual-port 25 GbE NIC, and two 800 watt power supply units.

| Generation | Gen 12 Compute | Previous Gen 11 Compute |
|---|---|---|
| Form Factor | 2U1N – Single socket | 1U1N – Single socket |
| Processor | AMD EPYC 9684X Genoa-X 96-Core Processor | AMD EPYC 7713 Milan 64-Core Processor |
| Memory | 384 GB of DDR5-4800, 12 memory channels | 384 GB of DDR4-3200, 8 memory channels |
| Storage | 2x E1.S NVMe: Samsung PM9A3 7.68 TB / Micron 7450 Pro 7.68 TB | 2x M.2 NVMe: Samsung PM9A3 1.92 TB |
| Network | Dual 25 GbE OCP 3.0: Intel Ethernet Network Adapter E810-XXVDA2 / NVIDIA Mellanox ConnectX-6 Lx | Dual 25 GbE OCP 2.0: Mellanox ConnectX-4 dual-port 25G |
| System Management | DC-SCM 2.0: ASPEED AST2600 (BMC) + AST1060 (HRoT) | ASPEED AST2500 (BMC) |
| Power Supply | 800W – Titanium Grade | 650W – Titanium Grade |

Cloudflare Gen 12 server

CPU

During the design phase, we conducted an extensive survey of the CPU landscape, which offers valuable choices as we consider how to shape the future of Cloudflare’s server technology to match the needs of our customers. We evaluated many candidates in the lab and short-listed three standout candidates from the 4th generation AMD EPYC Processor lineup for production evaluation: Genoa 9654, Bergamo 9754, and Genoa-X 9684X. The table below compares the specifications of the short-listed candidates for Gen 12 servers against the AMD EPYC 7713 used in our Gen 11 servers. Notably, all three candidates offer a significant increase in core count and a marked increase in all-core boost clock frequency.

| CPU Model | AMD EPYC 7713 | AMD EPYC 9654 | AMD EPYC 9754 | AMD EPYC 9684X |
|---|---|---|---|---|
| Series | Milan | Genoa | Bergamo | Genoa-X |
| # of CPU Cores | 64 | 96 | 128 | 96 |
| # of Threads | 128 | 192 | 256 | 192 |
| Base Clock | 2.0 GHz | 2.4 GHz | 2.25 GHz | 2.4 GHz |
| Max Boost Clock | 3.67 GHz | 3.7 GHz | 3.1 GHz | 3.7 GHz |
| All Core Boost Clock | 2.7 GHz * | 3.55 GHz | 3.1 GHz | 3.42 GHz |
| Total L3 Cache | 256 MB | 384 MB | 256 MB | 1152 MB |
| L3 cache per core | 4 MB / core | 4 MB / core | 2 MB / core | 12 MB / core |
| Maximum configurable TDP | 240W | 400W | 400W | 400W |

*Note: The AMD EPYC 7713 all-core boost clock frequency of 2.7 GHz is not an official specification of the CPU; it is based on data collected from Cloudflare’s production fleet.

During production evaluation, the configuration of all three CPUs was optimized to the best of our knowledge, including setting the thermal design power (TDP) to 400W for maximum performance. The servers were set up to run the same processes and services as any other server we have in production, which makes for a great side-by-side comparison.

| | Milan 7713 | Genoa 9654 | Bergamo 9754 | Genoa-X 9684X |
|---|---|---|---|---|
| Production performance (requests per second) multiplier | 1x | 2x | 2.15x | 2.45x |
| Production efficiency (requests per second per watt) multiplier | 1x | 1.33x | 1.38x | 1.63x |

AMD EPYC Genoa-X in Cloudflare Gen 12 server

Each of these CPUs outperforms the previous generation of processors by at least 2x. AMD EPYC 9684X Genoa-X with 3D V-cache technology gave us the greatest performance improvement, at 2.45x, when compared against our Gen 11 servers with AMD EPYC 7713 Milan.

Comparing Genoa-X 9684X with Genoa 9654, we see a ~22.5% performance delta. The primary difference between the two CPUs is the amount of L3 cache: Genoa-X 9684X has 1152 MB, three times the 384 MB on Genoa 9654. Cloudflare workloads benefit from having more data in low-level cache, which avoids the much larger latency penalty of fetching data from memory.

The Genoa-X 9684X delivered ~22.5% more performance than the Genoa 9654 while consuming the same 400W. The 3x larger L3 cache does consume additional power, but the only cost is about 3% of the highest achievable all-core boost frequency on Genoa-X 9684X, a favorable trade-off for Cloudflare workloads.

More importantly, the Genoa-X 9684X delivered a 145% performance improvement with only a 50% increase in system power, a 63% power efficiency improvement that will help drive down operational expenditure tremendously. It is important to note that even though a big portion of the power efficiency comes from the CPU, it needs to be paired with an optimal thermal-mechanical design to realize the full benefit. Last year, we made the thermal-mechanical design choice to double the height of the server chassis to optimize rack density and cooling efficiency across our global data centers. We estimated that moving from 1U to 2U would reduce fan power by 150W, which would decrease system power from 750 watts to 600 watts. Guess what? We were right: a Gen 12 server consumes 600 watts per system at a typical ambient temperature of 25°C.
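The headline figures follow from simple ratios. The sketch below recomputes them from the multipliers in the production table and from the 400 W (Gen 11) and 600 W (Gen 12) system power figures quoted in this post; the variable and function names are illustrative only.

```python
# Requests-per-second multipliers relative to Gen 11 (Milan 7713),
# copied from the production evaluation table.
rps_multiplier = {
    "Genoa 9654": 2.00,
    "Bergamo 9754": 2.15,
    "Genoa-X 9684X": 2.45,
}

# System power quoted in the post: 400 W for Gen 11, 600 W for Gen 12.
GEN11_WATTS = 400
GEN12_WATTS = 600


def percent_gain(multiplier: float) -> float:
    """Convert a multiplier such as 2.45x into a percentage improvement."""
    return (multiplier - 1.0) * 100.0


# Efficiency (RPS per watt) is the performance ratio divided by the power ratio.
power_ratio = GEN12_WATTS / GEN11_WATTS
efficiency_multiplier = rps_multiplier["Genoa-X 9684X"] / power_ratio

# Genoa-X compared directly with Genoa at the same 400 W TDP.
genoa_x_vs_genoa = rps_multiplier["Genoa-X 9684X"] / rps_multiplier["Genoa 9654"]

print(f"Performance gain vs Gen 11: {percent_gain(rps_multiplier['Genoa-X 9684X']):.0f}%")
print(f"System power increase:      {percent_gain(power_ratio):.0f}%")
print(f"Efficiency gain vs Gen 11:  {percent_gain(efficiency_multiplier):.0f}%")
print(f"Genoa-X over Genoa:         {percent_gain(genoa_x_vs_genoa):.1f}%")
```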

While high performance often comes at a higher price, fortunately the AMD EPYC 9684X offers an excellent balance between cost and capability. A server designed with this CPU provides top-tier performance without necessitating a huge financial outlay, resulting in a good Total Cost of Ownership improvement for Cloudflare.

Memory

The AMD Genoa-X CPU supports twelve memory channels of DDR5 RAM at up to 4800 mega transfers per second (MT/s), for a per-socket memory bandwidth of 460.8 GB/s. All twelve channels are populated with 32 GB ECC 2Rx8 DDR5 RDIMMs in a one-DIMM-per-channel configuration, for a combined memory capacity of 384 GB.
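The 460.8 GB/s figure is the theoretical peak: channels times transfer rate times bytes per transfer. Here is a minimal sketch of that arithmetic, assuming the standard 64-bit data width per memory channel (ECC bits excluded); the function name is illustrative.

```python
def peak_bandwidth_gb_s(channels: int, mega_transfers_per_s: int,
                        data_width_bits: int = 64) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes).

    Assumes each channel moves `data_width_bits` of data per transfer.
    """
    bytes_per_transfer = data_width_bits / 8
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


gen12 = peak_bandwidth_gb_s(channels=12, mega_transfers_per_s=4800)  # DDR5-4800
gen11 = peak_bandwidth_gb_s(channels=8, mega_transfers_per_s=3200)   # DDR4-3200

print(f"Gen 12 peak: {gen12:.1f} GB/s")
print(f"Gen 11 peak: {gen11:.1f} GB/s")
print(f"Gen 12 capacity: {12 * 32} GB")  # twelve 32 GB DIMMs
```

Measured bandwidth, such as the benchmark results later in this section, will be lower than this theoretical ceiling.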

Choosing the optimal memory capacity is a balancing act: maintaining the right memory-to-core ratio ensures that neither CPU capacity nor memory capacity is wasted. Some may remember that our Gen 11 servers with 64-core AMD EPYC 7713 CPUs are also configured with 384 GB of memory, which is 6 GB per core. So why did we choose to configure our Gen 12 servers with 384 GB of memory when the core count is growing to 96 cores? Great question! A lot of memory optimization work has happened since we introduced Gen 11, including some that we blogged about, like Bot Management code optimization and our transition to highly efficient Pingora.

In addition, each service has a memory allocation that is sized for optimal performance. The per-service memory allocation is programmed and monitored using Linux control group resource management features. When sizing memory capacity for Gen 12, we consulted with the team that monitors resource allocation and surveyed memory utilization metrics collected from our fleet. The analysis showed that the optimal memory-to-core ratio is 4 GB per CPU core, or 384 GB of total memory capacity. This configuration has been validated in production.

We chose dual rank memory modules over single rank memory modules because they have higher memory throughput, which improves server performance (read more about memory module organization and its effect on memory bandwidth).
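For readers unfamiliar with control groups, the per-service memory accounting mentioned above can be inspected through the cgroup v2 filesystem, where `memory.current` reports usage in bytes and `memory.max` holds the limit (or the literal string `max` when unlimited). The following is a minimal sketch, not Cloudflare's tooling; the cgroup path and helper names are hypothetical.

```python
from pathlib import Path
from typing import Optional, Tuple


def parse_limit(raw: str) -> Optional[int]:
    """Parse memory.max contents; cgroup v2 writes 'max' when no limit is set."""
    text = raw.strip()
    return None if text == "max" else int(text)


def read_memory(cgroup_dir: Path) -> Tuple[int, Optional[int]]:
    """Return (bytes in use, byte limit or None) for one cgroup v2 directory."""
    current = int((cgroup_dir / "memory.current").read_text().strip())
    limit = parse_limit((cgroup_dir / "memory.max").read_text())
    return current, limit


def utilization(current: int, limit: Optional[int]) -> Optional[float]:
    """Fraction of the allocation in use, or None when there is no limit."""
    if limit is None or limit == 0:
        return None
    return current / limit


# Hypothetical service cgroup; substitute a real path on your own system.
service = Path("/sys/fs/cgroup/system.slice/example.service")
if (service / "memory.current").exists() and (service / "memory.max").exists():
    used, cap = read_memory(service)
    print(f"used={used} limit={cap} utilization={utilization(used, cap)}")
```

Aggregating this kind of utilization data across services and servers is one way to arrive at a fleet-wide memory-to-core ratio.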

The table below shows the result of running the Intel Memory Latency Checker (MLC) tool to measure peak memory bandwidth for the system and to compare memory throughput between 12 channels of dual rank (2Rx8) 32 GB DIMMs and 12 channels of single rank (1Rx4) 32 GB DIMMs. Dual rank DIMMs have slightly higher (1.8%) read memory bandwidth, but noticeably higher write bandwidth. As the write ratio increased from 25% (3:1 reads-writes) to 50% (1:1 reads-writes), the dual rank advantage grew by about 10 percentage points, from 107.7% to 117.8% of single rank throughput.

| Benchmark | Dual rank throughput relative to single rank |
|---|---|
| Intel MLC ALL Reads | 101.8% |
| Intel MLC 3:1 Reads-Writes | 107.7% |
| Intel MLC 2:1 Reads-Writes | 112.9% |
| Intel MLC 1:1 Reads-Writes | 117.8% |
| Intel MLC Stream-triad like | 108.6% |

The table below shows the result of running the AMD STREAM benchmark to measure sustainable main memory bandwidth in MB/s and the corresponding computation rate for simple vector kernels. In all 4 types of vector kernels, dual rank DIMMs provide a noticeable advantage over single rank DIMMs.

| Benchmark | Dual rank throughput relative to single rank |
|---|---|
| Stream Copy | 115.44% |
| Stream Scale | 111.22% |
| Stream Add | 109.06% |
| Stream Triad | 107.70% |

Storage

Cloudflare’s Gen X and Gen 11 servers support M.2 form factor drives. We liked the M.2 form factor mainly because it was compact. The M.2 specification was introduced in 2012, but today the connector system is dated, and the industry has concerns about its ability to maintain signal integrity at the high signaling speeds specified by PCIe 5.0 and PCIe 6.0. The 8.25W thermal limit of the M.2 form factor also limits the number of flash dies that can be fitted, which limits the maximum supported capacity per drive. To address these concerns, the industry has introduced the E1.S specification and is transitioning from the M.2 form factor to the E1.S form factor.

In Gen 12, we are making the change to the EDSFF E1 form factor, more specifically E1.S 15mm. Though still compact, E1.S 15mm provides more space to fit more flash dies for larger capacity support. The form factor also has a better cooling design that supports more than 25W of sustained power.

While the AMD Genoa-X CPU supports 128 PCIe 5.0 lanes, we continue to use NVMe devices with PCIe Gen 4.0 x4 lanes, as PCIe Gen 4.0 throughput is sufficient to meet drive bandwidth requirements and keep server design costs optimal. The server is equipped with two 8 TB NVMe drives for a total of 16 TB available storage. We opted for two 8 TB drives instead of four 4 TB drives because the dual 8 TB configuration already provides sufficient I/O bandwidth for all Cloudflare workloads that run on each server.

| Specification | Value |
|---|---|
| Sequential Read (MB/s) | 6,700 |
| Sequential Write (MB/s) | 4,000 |
| Random Read IOPS | 1,000,000 |
| Random Write IOPS | 200,000 |
| Endurance | 1 DWPD |
| PCIe Gen4 x4 lane throughput | 7,880 MB/s |

Storage devices performance specification
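The PCIe Gen4 x4 throughput in the table can be derived from the link parameters: PCIe 4.0 signals at 16 GT/s per lane and uses 128b/130b encoding. The short sketch below reproduces the approximately 7,880 MB/s figure and compares it with the drive's sequential read specification; the function name is illustrative.

```python
def pcie_throughput_mb_s(gigatransfers_per_s: float, lanes: int,
                         payload_bits: int = 128, encoded_bits: int = 130) -> float:
    """Usable link bandwidth in MB/s after line-encoding overhead.

    Protocol overhead above the encoding layer (packet headers, flow
    control) is ignored, so real-world throughput is somewhat lower.
    """
    usable_gbit_s = gigatransfers_per_s * lanes * payload_bits / encoded_bits
    return usable_gbit_s * 1000 / 8


link = pcie_throughput_mb_s(gigatransfers_per_s=16.0, lanes=4)  # PCIe 4.0 x4
drive_seq_read = 6700  # MB/s, from the specification table

print(f"PCIe Gen4 x4 link: {link:.0f} MB/s")
print(f"Headroom over sequential read: {link - drive_seq_read:.0f} MB/s")
```

Because the link ceiling sits above the drive's fastest sequential transfer rate, a PCIe 5.0 connection would not make these drives any faster.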

Network

Cloudflare servers and top-of-rack (ToR) network equipment operate at 25 GbE speeds. In Gen 12, we utilized a DC-MHS motherboard-inspired design, and upgraded from an OCP 2.0 form factor to an OCP 3.0 form factor, which provides tool-less serviceability of the NIC. The OCP 3.0 form factor also occupies less space in the 2U server compared to PCIe-attached NICs, which improves airflow and frees up space for other application-specific PCIe cards, such as GPUs.

Cloudflare has been using the Mellanox CX4-Lx EN dual port 25 GbE NIC since our Gen 9 servers in 2018. Even though the NIC has served us well over the years, it was single-sourced. During the pandemic, we were faced with supply constraints and extremely long lead times. The team scrambled to qualify the Broadcom M225P dual port 25 GbE NIC as our second-source NIC in 2022, ensuring we could continue to turn up servers to serve customer demand. With the lessons learned from single-sourcing the Gen 11 NIC, we are now dual-sourcing and have chosen the Intel Ethernet Network Adapter E810 and NVIDIA Mellanox ConnectX-6 Lx to support Gen 12. These two NICs are compliant with the OCP 3.0 specification and offer more MSI-X queues that can be mapped to the increased core count on the AMD EPYC 9684X. The Intel Ethernet Network Adapter comes with an additional advantage: it offers full Generic Segmentation Offload (GSO) support, including for VLAN-tagged encapsulated traffic, whereas many vendors today either support only partial GSO or do not support it at all. With full GSO support, the kernel spends noticeably less time in softirq segmenting packets, and servers with Intel E810 NICs process approximately 2% more requests per second.
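One generic way to observe how much CPU time a Linux host spends in softirq is to sample the aggregate `cpu` line of `/proc/stat` twice and compare the counters. The sketch below shows that technique under the documented field order (user, nice, system, idle, iowait, irq, softirq, steal); it is not the measurement method used for the figures above, and the helper names are illustrative.

```python
from typing import List

SOFTIRQ_INDEX = 6  # position of the softirq counter after the "cpu" label


def parse_cpu_ticks(line: str) -> List[int]:
    """Parse the aggregate 'cpu' line of /proc/stat into its first 8 counters.

    Guest time is left out because the kernel already counts it in user time.
    """
    fields = line.split()
    if len(fields) < 9 or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line from /proc/stat")
    return [int(value) for value in fields[1:9]]


def softirq_share(before: str, after: str) -> float:
    """Fraction of CPU time spent in softirq between two samples."""
    delta = [a - b for a, b in zip(parse_cpu_ticks(after), parse_cpu_ticks(before))]
    total = sum(delta)
    return delta[SOFTIRQ_INDEX] / total if total else 0.0


def read_cpu_line(path: str = "/proc/stat") -> str:
    """Read the first line of /proc/stat (Linux only)."""
    with open(path) as stat_file:
        return stat_file.readline()
```

Calling `read_cpu_line()` twice with a pause in between and passing both samples to `softirq_share` gives the softirq fraction for that interval, which can then be compared across NIC configurations under similar load.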

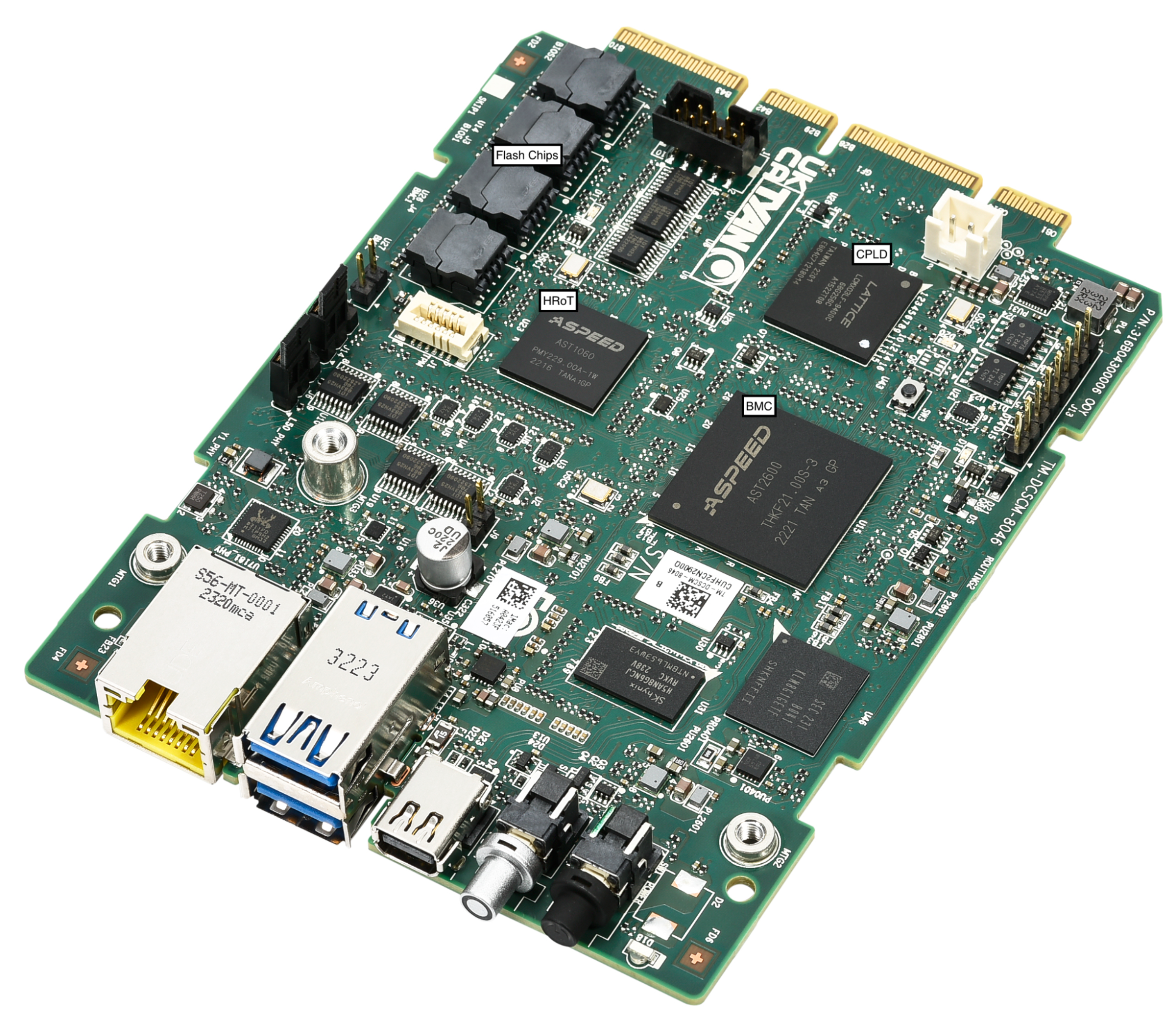

Improved security with DC-SCM: Project Argus

DC-SCM in Gen 12 server (Project Argus)

Gen 12 servers are integrated with Project Argus, one of the industry’s first implementations of Data Center Secure Control Module 2.0 (DC-SCM 2.0). DC-SCM 2.0 decouples server management and security functions from the motherboard. The baseboard management controller (BMC), hardware root of trust (HRoT), trusted platform module (TPM), and dual BMC/BIOS flash chips are all installed on the DC-SCM.

On our Gen X and Gen 11 servers, Cloudflare moved our secure boot trust anchor from the system Basic Input/Output System (BIOS) or Unified Extensible Firmware Interface (UEFI) firmware to hardware-rooted boot integrity: AMD’s implementation of Platform Secure Boot (PSB) or Ampere’s implementation of Single Domain Secure Boot. These solutions helped secure Cloudflare infrastructure against BIOS/UEFI firmware attacks. However, we were still vulnerable to out-of-band attacks that compromise the BMC firmware. The BMC is a microcontroller that provides out-of-band monitoring and management capabilities for the system. Once it is compromised, attackers can, for example, read processor console logs accessible to the BMC and control server power states. On Gen 12, the HRoT on the DC-SCM serves as the trust store for cryptographic keys and is responsible for authenticating the BIOS/UEFI firmware (independent of CPU vendor) and the BMC firmware during the secure boot process.

In addition, the DC-SCM carries additional flash storage devices that hold backup copies of the BIOS/UEFI firmware and BMC firmware. These allow rapid recovery when corrupted or malicious firmware is programmed, and provide resilience against flash chip failure due to aging.
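The verify-then-recover flow described above can be illustrated in a few lines. This is a deliberately simplified sketch: a real HRoT verifies cryptographic signatures in dedicated hardware, whereas the example below substitutes a plain SHA-384 digest comparison, and every name in it is hypothetical.

```python
import hashlib
import hmac
from typing import Optional


def is_trusted(image: bytes, trusted_digest: bytes) -> bool:
    """Compare the image's SHA-384 digest with a trusted value.

    Stand-in for signature verification; compare_digest avoids timing leaks.
    """
    return hmac.compare_digest(hashlib.sha384(image).digest(), trusted_digest)


def select_boot_image(primary: bytes, backup: bytes,
                      trusted_digest: bytes) -> Optional[str]:
    """Pick which flash copy to boot: primary first, then the backup.

    Returns None when neither copy can be authenticated, in which case
    the platform should refuse to boot that firmware.
    """
    if is_trusted(primary, trusted_digest):
        return "primary"
    if is_trusted(backup, trusted_digest):
        return "backup"
    return None


# Illustrative data only: a "known good" image and a tampered primary copy.
good_image = b"example firmware image"
trusted = hashlib.sha384(good_image).digest()
print(select_boot_image(b"tampered image", good_image, trusted))
```

The same check applies to both BIOS/UEFI and BMC firmware, which is what lets the backup flash act as a recovery path rather than a second attack surface.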

These updates make our Gen 12 server more secure and more resilient to firmware attacks.

Power

A Gen 12 server consumes 600 watts at a typical ambient temperature of 25°C. Even though this is a 50% increase from the 400 watts consumed by the Gen 11 server, as mentioned above in the CPU section, this is a relatively small price to pay for a 145% increase in performance. We’ve paired the server up with dual 800W common redundant power supplies (CRPS) with 80 PLUS Titanium grade efficiency. Both power supply units (PSU) operate actively with distributed power and current. The units are hot-pluggable, allowing the server to operate with redundancy and maximize uptime.

80 PLUS is a PSU efficiency certification program. A Titanium grade PSU is 2% more efficient than a Platinum grade PSU at typical operating loads of 25% to 50%. 2% may not sound like a lot, but considering the size of Cloudflare’s fleet, with servers deployed worldwide, a 2% saving over the lifetime of all Gen 12 deployments is a reduction of more than 7 GWh, equivalent to the carbon sequestered by more than 3400 acres of U.S. forests in one year. This upgrade also means our Gen 12 server complies with EU Lot9 requirements and can be deployed in the EU region.

| 80 PLUS certification | 10% load | 20% load | 50% load | 100% load |
|---|---|---|---|---|
| 80 PLUS Platinum | – | 92% | 94% | 90% |
| 80 PLUS Titanium | 90% | 94% | 96% | 91% |
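To get a feel for what two percentage points mean per server, the sketch below converts PSU efficiency into wall power. It assumes, purely for illustration, that the 600 W system figure is the DC load delivered by the PSUs and that both units share it equally; the post does not state where the 600 W is measured, and the efficiency values are the 50% load column of the table.

```python
PSU_RATING_WATTS = 800
PSU_COUNT = 2
SYSTEM_LOAD_WATTS = 600  # assumption: treated as DC output of the PSUs

PLATINUM_EFFICIENCY = 0.94  # 50% load column of the table
TITANIUM_EFFICIENCY = 0.96  # 50% load column of the table


def input_watts(output_watts: float, efficiency: float) -> float:
    """Wall power needed to deliver `output_watts` at a given efficiency."""
    return output_watts / efficiency


# With load shared equally, each PSU runs inside the 25% to 50% band.
load_fraction = SYSTEM_LOAD_WATTS / PSU_COUNT / PSU_RATING_WATTS

saved_watts = (input_watts(SYSTEM_LOAD_WATTS, PLATINUM_EFFICIENCY)
               - input_watts(SYSTEM_LOAD_WATTS, TITANIUM_EFFICIENCY))
saved_kwh_per_year = saved_watts * 24 * 365 / 1000

print(f"Load per PSU: {load_fraction:.1%}")
print(f"Saved per server: {saved_watts:.1f} W, {saved_kwh_per_year:.0f} kWh per year")
```

Multiplying a per-server saving of this order by fleet size and service lifetime is how a small efficiency difference adds up to gigawatt-hours.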

Drop-in GPU support

Demand for machine learning and AI workloads exploded in 2023, and Cloudflare introduced Workers AI to serve the needs of our customers. Cloudflare retrofitted or deployed GPUs worldwide in a portion of our Gen 11 server fleet to support the growth of Workers AI. Our Gen 12 server is also designed to accommodate the addition of more powerful GPUs. This gives Cloudflare the flexibility to support Workers AI in all regions of the world, and to strategically place GPUs in regions to reduce inference latency for our customers. With this design, the server can run Cloudflare’s full software stack. During times when GPUs see lower utilization, the server continues to serve general web requests and remains productive.

The motherboard is electrically designed to support up to two PCIe add-in cards, and the power distribution board is sized to support an additional 400W of power. The mechanics are sized to support either a single FHFL (full height, full length) double-width GPU PCIe card or two FHFL single-width GPU PCIe cards. The thermal solution, including the component placement, fans, and air duct design, is sized to support adding GPUs with a TDP of up to 400W.

Looking to the future

Gen 12 Servers are currently deployed and live in multiple Cloudflare data centers worldwide, and already process millions of requests per second. Cloudflare’s EPYC journey has not ended — the 5th-gen AMD EPYC CPUs (code name “Turin”) are already available for testing, and we are very excited to start the architecture planning and design discussion for the Gen 13 server. Come join us at Cloudflare to help build a better Internet!